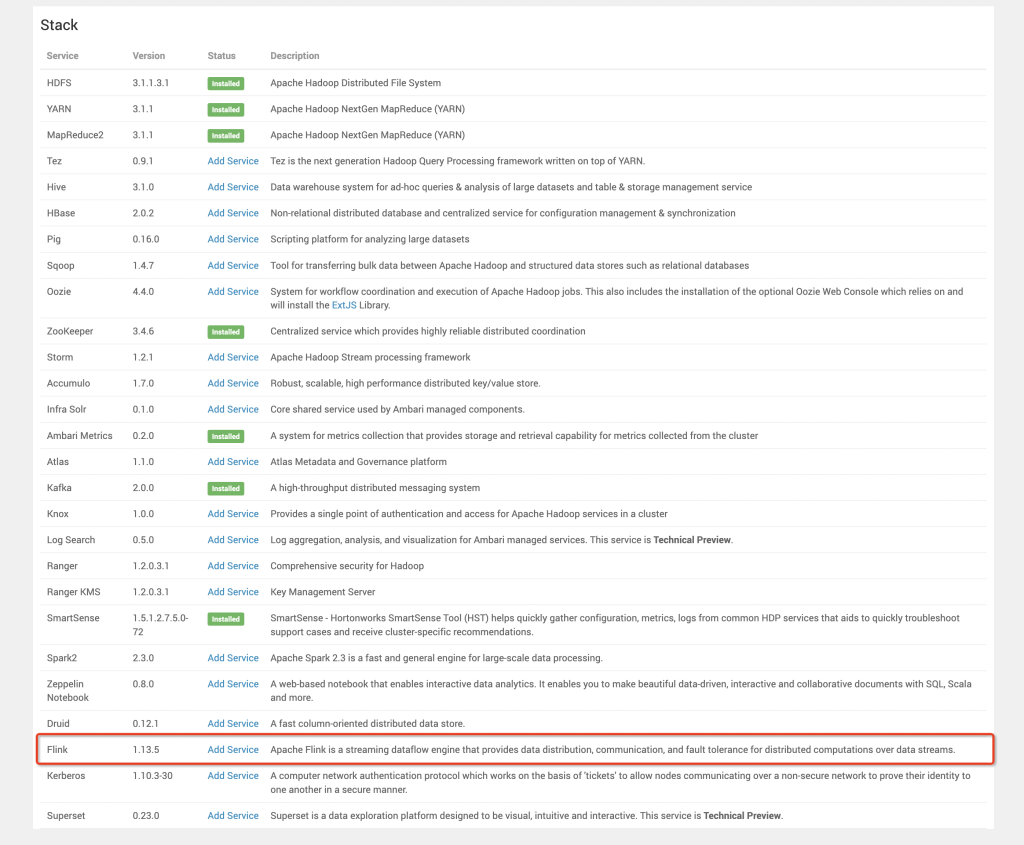

HDP Configuration and Component Installation

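Note: because we run Flink in yarn-application mode (Flink runs inside the YARN cluster and, in the actual architecture, is not monitored or managed by ambari-agent), adding Flink to Ambari is not required. The Flink service here serves only as an example that makes it easier to manage component versions, downloads, and debugging.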

Place the downloaded packages under http://127.0.0.1/ambari/ (already done in the earlier steps).

Download the service package from GitHub and place it in the stack directory (we use stack version 3.1 here, so replace the variable $VERSION below with 3.1).
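Rather than substituting the version by hand, the stack version can be set once as a shell variable and reused in the paths that follow. A minimal sketch, assuming HDP 3.1 as in this guide; `SERVICE_DIR` is a name introduced here for illustration only:

```shell
# Stack version used throughout this guide (assumption: HDP 3.1).
VERSION=3.1

# Target directory for the Flink service definition.
# SERVICE_DIR is an illustrative name, not something Ambari itself reads.
SERVICE_DIR="/var/lib/ambari-server/resources/stacks/HDP/${VERSION}/services/FLINK"

echo "Flink service definition will be placed in: ${SERVICE_DIR}"
```

With `VERSION` exported in the current shell, commands that contain `$VERSION` can be pasted as written.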

git clone https://github.com/abajwa-hw/ambari-flink-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/FLINK

Edit metainfo.xml and set the installed version to 1.13.5 (because of issues with the flink-cdc component, we use Flink 1.13.x):

cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/

vim metainfo.xml
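For a scripted setup, the same version change could be made without opening an editor. The sketch below assumes GNU sed (for `-i`) and that metainfo.xml contains a single `<version>` element; `set_flink_version` is a hypothetical helper name, not part of the service package:

```shell
# set_flink_version FILE VERSION
# Replaces the content of every <version>...</version> element in FILE
# with VERSION. <schemaVersion> and other tags are left untouched because
# the pattern matches the literal tag name <version> only.
set_flink_version() {
  sed -i "s|<version>[^<]*</version>|<version>$2</version>|" "$1"
}

# Example call (path taken from this guide):
# set_flink_version /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/metainfo.xml 1.13.5
```

Running `grep '<version>' metainfo.xml` afterwards shows whether the edit took effect. The relevant fields of the edited file look like this: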

<displayName>Flink</displayName>

<comment>Apache Flink is a streaming dataflow.。。。。.</comment>

<version>1.13.5</version>

Configure JAVA_HOME for the Flink component:

cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/configuration

vim flink-env.xml
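Before writing the Java path into the file, it may be worth confirming that the directory really contains a `java` binary. A small sketch; `check_java_home` is a hypothetical helper introduced here, and `/usr/local/java` is simply the path used in this guide:

```shell
# check_java_home DIR
# Succeeds only if DIR/bin/java exists and is executable.
check_java_home() {
  if [ -x "$1/bin/java" ]; then
    echo "OK: found $1/bin/java"
  else
    echo "Missing or not executable: $1/bin/java" >&2
    return 1
  fi
}

# Example call (path from this guide):
# check_java_home /usr/local/java
```

The value to set in flink-env.xml is then: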

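env.java.home: /usr/local/java/ # change this to the path of the Java installation set up earlier

Edit flink-ambari-config.xml and change the download URLs to the network path created in the first step: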

cd /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/configuration

vim flink-ambari-config.xml

<property>

<name>flink_download_url</name>

<!--<value>http://www.us.apache.org/dist/flink/flink-1.9.1/flink-1.9.1-bin-scala_2.11.tgz</value>-->

<value>http://127.0.0.1/ambari/flink-1.13.5-bin-scala_2.11.tgz</value>

<description>Snapshot download location. Downloaded when setup_prebuilt is true</description>

</property>

<property>

<name>flink_hadoop_shaded_jar</name>

<!--<value>https://repo.maven.apache.org/maven2/org/apache/flink/flink-shaded-hadoop-2-uber/2.6.5-7.0/flink-shaded-hadoop-2-uber-2.6.5-7.0.jar</value>-->

<value>http://127.0.0.1/ambari/flink-shaded-hadoop-2-uber-2.8.3-10.0.jar</value>

<description>Flink shaded hadoop jar download location. Downloaded when setup_prebuilt is true</description>

</property>

Create the user and group:

groupadd flink

useradd -d /home/flink -g flink flink

Finally, restart ambari-server to finish adding the component:

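service ambari-server restart

Now open Stack and Versions in the Ambari admin UI; the newly added Flink component is listed there. Click Add Service and follow the prompts to complete the installation.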
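Should the installation have trouble fetching the packages, one quick check is whether the URLs configured in flink-ambari-config.xml are reachable from the host. The sketch below is one possible way to do that; `list_config_urls` and `check_config_urls` are hypothetical helper names, and the parsing assumes each `<value>` sits on a single line and that commented-out values are single-line `<!-- ... -->` comments, as in the file shown above:

```shell
# list_config_urls FILE
# Prints the http(s) URLs found in active <value> elements of FILE.
# Lines containing an XML comment opener are skipped.
list_config_urls() {
  grep -v '<!--' "$1" | sed -n 's|.*<value>\(https\{0,1\}://[^<]*\)</value>.*|\1|p'
}

# check_config_urls FILE
# Sends a HEAD request to each URL and reports whether it answered successfully.
check_config_urls() {
  list_config_urls "$1" | while read -r url; do
    if curl -fsI "$url" >/dev/null; then
      echo "reachable: $url"
    else
      echo "NOT reachable: $url"
    fi
  done
}

# Example call (path from this guide):
# check_config_urls /var/lib/ambari-server/resources/stacks/HDP/3.1/services/FLINK/configuration/flink-ambari-config.xml
```

Any URL reported as not reachable points to a package missing from http://127.0.0.1/ambari/ or to a typo in the configured file name.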